“How do you choose which test cases to automate?”

Despite all the criticism and challenges, automation is a valuable part of a testing strategy. Every new automator needs to answer questions like this one:

“How do you choose which test cases to automate?”

While everything in testing depends on context, we can try to answer with a general idea supplemented by concrete examples.

Cross-posting my answer from Quora.

Preface

So a question was asked: “How do you choose which test cases to automate?” and details were requested: “provide examples of scenarios that you will NOT automate.”

Generally speaking, it only makes sense to automate if you count on a return on investment in your project (I leave purposes like training or cash burning aside).

So the decision chain goes as follows.

- Test results would be of value.

- There’s no faster or cheaper way to get the results than to use automation.

- Downsides of the automation won’t outweigh the value of test results. Cons include implementation and maintenance costs, as well as questionable reliability of the results.

If you answered “No” at any step then you don’t need automation.
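As a rough sketch, the chain above can be written down as a small Python check. The `Candidate` fields and the cost comparison are my illustrative assumptions: in practice these are judgment calls, not measured numbers, and reliability is modeled here simply as a discount on the value of the results.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """Hypothetical description of a test case considered for automation."""
    results_have_value: bool          # step 1: would anyone act on the results?
    cheaper_alternative_exists: bool  # step 2: is there a faster or cheaper way?
    expected_value: float             # estimated benefit, in any consistent unit
    build_cost: float                 # implementation cost, same unit
    maintenance_cost: float           # upkeep over the script's lifetime
    reliability: float                # 0.0 to 1.0, how far results can be trusted


def should_automate(c: Candidate) -> bool:
    """Answer "No" at any step and the case is not worth automating."""
    if not c.results_have_value:
        return False
    if c.cheaper_alternative_exists:
        return False
    # Step 3: unreliable results are worth less, so discount the value.
    return c.expected_value * c.reliability > c.build_cost + c.maintenance_cost
```

A flaky script with high upkeep fails the third step even when its results would be valuable in principle.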

Note: much of that decision making is rooted not in automation but in software testing in general. This topic (as well as many others) is greatly explored in Gerald Weinberg’s book “Perfect Software and Other Illusions about Testing”.

Strategic Examples

Exploratory tests vs. Repetitive tasks

- Testing assumes open-ended questioning and automation sucks at it. Use skilled humans for that.

- Mundane repetitive tasks, where 1–2–3 criteria allow for saying “yes”, are a good example for automation. But beware of Automation Problems – see below.

Beyond the GUI

- These days, many products don’t have a GUI, and may not have any UI.

- Judge by the availability of technology and tools. APIs fit automation well. So does load / stress / performance testing.

- However, many problems can only be spotted with a good old human code review. Even if you’re not reading code, practice asking questions about code at feature walkthroughs. Programmers like to talk about their code and you can learn a great deal.

Human Interaction and User Experience

- In a modern UI, interactions are highly variable, and include loops, interruptions, and crazy combinations of steps. Automation sticks to a straightforward sequence, which often misses many problems.

- Interaction includes perceiving and understanding information, as well as preferred ways to memorize and operate. Modern software should be accessible to people with disabilities, and automated testing handles that poorly (although it’s very helpful for checking HTML patterns and anti-patterns, color contrast, and so on).

- User Experience is a lot about how we feel about the product. Automation has nothing to do with that.

Security Scanning vs. Security Testing

- There’s a variety of tools that scan code for known security loopholes, and that automation is great.

- There are also tools that probe and scan a running application automatically, and discover vulnerabilities like JavaScript injection.

- But remember that new vulnerabilities appear nearly every day, and tools are lagging behind, because it takes humans to analyze threats, extract patterns, and implement the algorithm.

- Also remember that a technical threat still needs skilled human investigation, and framing as a problem for the business to consider fixing.

Beware: GUI Test Automation Problems

Often, the notion of test automation is reduced to a notion of GUI automation of functional test cases. Although very promising, GUI automation inherently bears a number of problems – and risks associated with them.

The main questions are Reliability (Robustness), Scalability, and Maintainability.

At some point, I wrote quite extensively about the subject: Requirements – Automation Beyond

Tactical Examples

What NOT to automate?

To give grassroots examples, I’m using the same application referenced in my answer about learning testing: Albert Gareev’s answer to How can I start learning about manual testing in a practical manner?

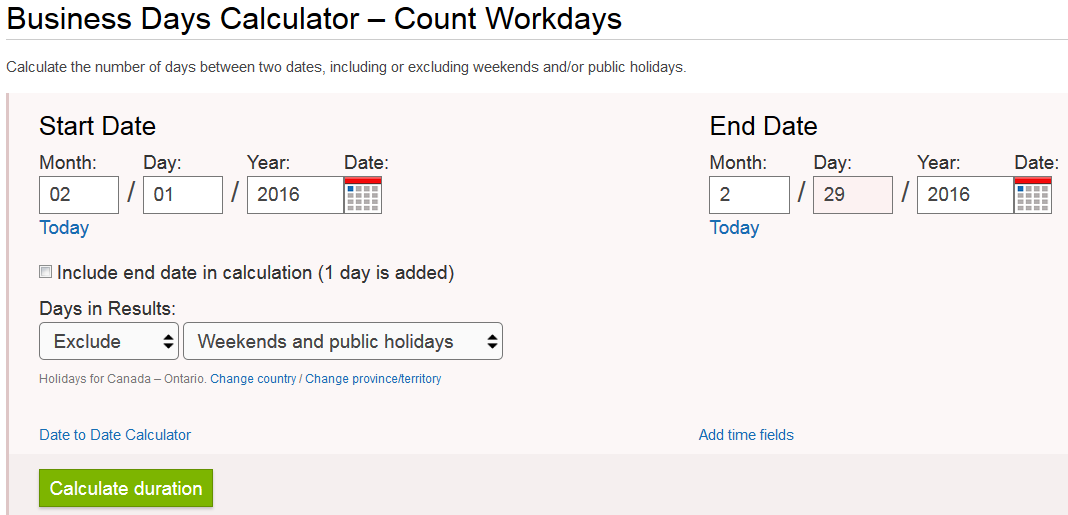

Here’s the app: Business Days Calculator – Count Workdays

Do NOT automate GUI verification

- Don’t bother to automate verification of presence/state/location/etc. of controls and static text.

- To begin with, if the GUI is fluctuating because the screen is being designed, you’re in exploratory testing mode, looking for new problems, not automated checking of old and known.

- Constantly updating GUI recognition in scripts will cost much more than just testing with a pair of eyes.

- It’s very time-consuming and unreliable to try and “digitize” look-and-feel for verification. And even if you did –

- Scripts may check existing controls and text, but what if something new appears? You can’t code for something you don’t know about yet.

- Finally, if GUI fluctuation is a known problem and a real risk – solve it, don’t accept it.

Do NOT automate high-maintenance cases for a long run

This is more about automation techniques than testing.

- Do NOT use hard-coded data for input and verification.

- Have a single script that can take all data combinations and permutations.

- Maintaining a data sheet is much faster and easier than maintaining a hard-coded script. Plus, any tester in your team could do that.

- Now consider that a single statement needs to be changed in the flow. Would you rather change one script covering 50 cases, or fifty scripts?

- Do NOT use hard-coded logic in automation.

- Look at the app. The whole flow includes inputs for Start Date, End Date, check-boxes and drop-downs. But there are also plenty of cases where only some controls can be populated.

- Here’s the thing: do NOT code multiple scripts for multiple scenarios.

- Code a data-driven script which will skip the fields if data parameters weren’t provided in the sheet.

- This way, you can cover all combinations and permutations just by varying your input data in the spreadsheet. One script + 100 data rows -> coverage for 100 test cases is a normal ratio in efficient test automation.

- Tip: learn Functional Decomposition to code effective and efficient scripts.
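To make the data-driven idea concrete, here is a minimal Python sketch. Everything specific in it is an assumption for illustration: the column names, the sample rows, and the `set_field` callback, which stands in for whatever call your automation tool provides to populate a control. An empty cell means "leave that control untouched".

```python
import csv
import io

# Hypothetical data sheet; in practice this would be a spreadsheet or CSV file
# that any tester on the team can edit.
DATA_SHEET = """case_id,start_date,end_date,include_end_date,country
full_flow,2024-01-01,2024-01-31,yes,Canada
dates_only,2024-02-01,2024-02-29,,
start_only,2024-03-01,,,
"""


def plan_steps(row):
    """Return the (field, value) steps for one data row, skipping blank cells."""
    return [(field, value) for field, value in row.items()
            if field != "case_id" and value]


def run_sheet(sheet_text, set_field):
    """Drive every row of the sheet through the same single flow.

    set_field(field, value) is a placeholder for the tool-specific call
    that populates one control.
    """
    executed = {}
    for row in csv.DictReader(io.StringIO(sheet_text)):
        steps = plan_steps(row)
        for field, value in steps:
            set_field(field, value)
        executed[row["case_id"]] = steps
    return executed
```

Calling `run_sheet(DATA_SHEET, my_tool_set_field)` runs all three cases through one flow; adding a fourth scenario means adding a row, not a script.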

Do NOT automate low risk and redundant coverage cases

This is more about testing, risk analysis, and test design techniques, rather than automation. However, many inexperienced automators create scripts just because they can, without consideration of risks, costs, and benefits.

- Do NOT automate submission of data just day by day or randomly. Have a system. Get handy with test design techniques like boundary value analysis, equivalence partitioning, and so on.

- Select cases that would represent certain conditions: variation by conditions (28/29/30/31 days in a month, holidays, weekends, etc.), variation by result (zero days, 1 day, 100 days), going over week/month/year, past/future dates, and so on. You may have a little redundancy in coverage due to testing in One-Factor-At-a-Time mode but make sure you don’t miss important cases.

- Do NOT overly concentrate on [negative] cases for data rejection. Still cover those where invalid data may cause damage if it makes it through. However (at least, in the context of this app!) mishandling valid numeric data for date fields has a potentially much worse outcome than mishandling invalid non-numeric data.
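Selecting representative date cases can itself be systematic. The sketch below, in Python, picks one month-end rollover per distinct month length, a year rollover, a weekend-only range, and a same-day range. The case names and the choice of cases are my assumptions for illustration, and it deliberately does not state expected results, since those depend on the calculator’s settings and holiday calendar.

```python
import calendar
from datetime import date, timedelta


def month_end_cases(year):
    """Map each distinct month length in the year to the first month-end having it."""
    seen = {}
    for month in range(1, 13):
        length = calendar.monthrange(year, month)[1]
        seen.setdefault(length, date(year, month, length))
    return seen


def first_saturday(year):
    """Find the first Saturday of the year (weekday() == 5)."""
    d = date(year, 1, 1)
    while d.weekday() != 5:
        d += timedelta(days=1)
    return d


def boundary_ranges(year):
    """Build (name, start, end) date ranges that sit on calendar boundaries."""
    cases = []
    for length, last_day in sorted(month_end_cases(year).items()):
        cases.append((f"rollover_after_{length}_day_month",
                      last_day, last_day + timedelta(days=1)))
    cases.append(("year_rollover", date(year, 12, 31), date(year + 1, 1, 1)))
    saturday = first_saturday(year)
    cases.append(("weekend_only", saturday, saturday + timedelta(days=1)))
    cases.append(("same_day", date(year, 6, 15), date(year, 6, 15)))
    return cases
```

Running `boundary_ranges` for both a leap year and a non-leap year covers the 28/29/30/31 variation with a handful of rows instead of one row per calendar day.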

****************

Image credits: Fallout game series.