QA Test Automation Requirements (1) – Usability

Original date: 14 Apr 2009, 1:30pm

Quality Assurance Functional/Regression Automated Testing and Test Automation Requirements: Usability, Maintainability, Scalability, and Robustness

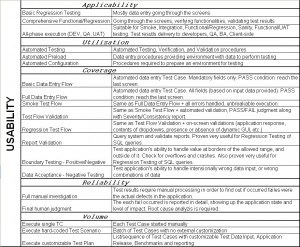

Usability Requirements Matrix 1

Applicability

– How would you want to use an Automated Testing solution?

Basic Regression Testing

Implemented as a sequence of data entry steps going through the screens.

No verification, or simplified verification based on hard-coded checkpoints.

Comprehensive Functional/Regression Testing

Thorough and robust automated testing. The test script goes through the screens, verifying functionality and validating test results.

The end-user can see the high-level picture in the Test Report and make a final judgment quickly. When needed, though, the Test Report provides all the details, down to the basic testing steps performed, so that the end-user can easily reproduce the test flow.

All-phase execution (DEV, QA, UAT)

Suitable for Smoke, Integration, Functional/Regression, Sanity, and Functional/UAT testing.

Test results are delivered to developers, QA, BA, and the client side.

While being a comprehensive Functional/Regression Testing solution (with the same requirements as above), it needs to be implemented in a user-friendly and generic way: the learning curve is short, it is easy for non-technical users, and it still offers specific detail for technical users.

The Test Report must be transferable and embeddable outside the Testing Tool.

The dataset is flexible, easily maintainable, and customizable.

Utilization

– Do you want to utilize the scripts for setup and configuration procedures, in addition to testing needs?

Automated Preload

Data entry procedures that populate the environment with the data needed for testing. They could also be used for database testing, volume testing, and release comparison testing.

I have also successfully used automated data entry flows in Production for data-migration projects. However, this requires perfectly robust scripts, extremely careful planning, and cooperation with other tech teams (to make a backup, provide special access, disable certain production features, etc.).
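A minimal sketch of a data-driven preload, assuming the records arrive as CSV and that `enter_record` is a hypothetical hook wrapping the application's data entry flow for one record. Rejected rows are collected instead of aborting the run, which matters most in the Production migration case, where every rejected record has to be accounted for afterwards.

```python
import csv
import io
from typing import Callable, Dict, Iterable, List, Tuple


def preload(rows: Iterable[Dict[str, str]],
            enter_record: Callable[[Dict[str, str]], None]) -> dict:
    """Push each data row through the data entry flow and collect the rejects.

    enter_record is a hypothetical hook that drives the application's screens
    for one record and raises an exception if the record cannot be entered.
    """
    loaded = 0
    rejected: List[Tuple[int, str]] = []
    # Row numbers start at 2 because line 1 of the CSV file is the header
    # (assuming one record per line).
    for row_no, row in enumerate(rows, start=2):
        try:
            enter_record(row)
            loaded += 1
        except Exception as exc:  # keep going; the reject log is reviewed afterwards
            rejected.append((row_no, f"{type(exc).__name__}: {exc}"))
    return {"loaded": loaded, "rejected": rejected}


if __name__ == "__main__":
    source = io.StringIO("name,amount\nAnn,10\nBob,x\n")
    # Stand-in for the real data entry flow: it only validates the amount.
    print(preload(csv.DictReader(source), lambda r: float(r["amount"])))
```

In a real preload the reject list would feed a report for the other teams involved, rather than being printed.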

Automated Configuration

All the setup and preparation procedures, such as setting the required configuration, creating/modifying options, etc.

When properly built, it saves a lot of manual time and gives a turnaround advantage.

Coverage

– How thorough do you want your automated testing to be? What kinds of testing do you want to automate?

Basic Data Entry Flow

Automated data entry Test Case. Populating mandatory fields only. PASS condition: reach the last screen.

Similar to Sanity Testing performed manually.

Full Data Entry Flow

Automated data entry Test Case. Populating all fields (based on input data provided). PASS condition: reach the last screen.

Similar to simplified positive functional testing performed manually.
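The Basic and Full Data Entry Flows differ only in which fields get populated; both share the same PASS condition. A minimal sketch, assuming a hypothetical `Screen` model that lets the user proceed only when its mandatory fields are filled; a real script would drive the application's GUI instead.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Screen:
    """Hypothetical model of one application screen."""
    name: str
    mandatory: List[str]
    optional: List[str] = field(default_factory=list)

    def submit(self, values: Dict[str, str]) -> bool:
        # The application lets the user proceed only if all mandatory fields are filled.
        return all(values.get(f) for f in self.mandatory)


def run_data_entry_flow(screens: List[Screen], data: Dict[str, str],
                        mandatory_only: bool = True) -> str:
    """Basic flow (mandatory_only=True) or Full flow (mandatory_only=False).

    PASS condition: every screen was submitted, i.e. the last screen was reached.
    """
    for screen in screens:
        fields = screen.mandatory if mandatory_only else screen.mandatory + screen.optional
        # Populate only the fields for which input data was provided.
        values = {f: data[f] for f in fields if f in data}
        if not screen.submit(values):
            return f"FAIL: stuck on screen '{screen.name}'"
    return "PASS"


if __name__ == "__main__":
    app = [Screen("Customer", ["name", "dob"], ["phone"]), Screen("Policy", ["plan"])]
    print(run_data_entry_flow(app, {"name": "Ann", "dob": "1980-01-01", "plan": "Gold"}))  # PASS
    print(run_data_entry_flow(app, {"name": "Ann", "plan": "Gold"}))  # FAIL: stuck on screen 'Customer'
```

Note that nothing is verified along the way: reaching the end is the only check, which is exactly what limits these two flows.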

Smoke Test Flow

Same as Full Data Entry Flow + all errors are handled and reported, which gives unbreakable execution and the advantage of high coverage.

One very beneficial way to use it:

• prepare automated smoke/regression test plans;

• let developers launch them after the build / overnight deployment;

• have test results available for PM, QA, and Dev next morning.

Developers can catch and fix the most obvious defects on the fly, without getting QA involved.

QA has more time to focus on failure analysis and to spend on complicated manual / ad hoc testing.
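The "unbreakable execution" of the Smoke Test Flow comes down to catching and reporting every error at the step level instead of letting it end the run. A minimal sketch, assuming steps are plain callables and that `recover` is a hypothetical hook that brings the application back to a known state (for example, a home screen) so the remaining steps can still run.

```python
import traceback
from typing import Callable, List, Optional, Tuple

Step = Tuple[str, Callable[[], None]]


def run_smoke_flow(steps: List[Step],
                   recover: Optional[Callable[[], None]] = None) -> dict:
    """Execute every step; a failing step is reported and the run continues.

    recover is a hypothetical hook that returns the application to a known
    state after an error, so later steps can still be executed.
    """
    report = {"passed": [], "errors": []}
    for name, step in steps:
        try:
            step()
            report["passed"].append(name)
        except Exception as exc:  # broad on purpose: no single error may stop the run
            report["errors"].append({
                "step": name,
                "error": f"{type(exc).__name__}: {exc}",
                "trace": traceback.format_exc(),
            })
            if recover is not None:
                recover()
    return report


if __name__ == "__main__":
    def select_plan() -> None:
        raise ValueError("Plan dropdown is empty")

    result = run_smoke_flow([("login", lambda: None),
                             ("select plan", select_plan),
                             ("submit", lambda: None)])
    print(result["passed"], [e["error"] for e in result["errors"]])
```

Keeping the full traceback in each error entry is what lets the morning report be read without re-running the flow.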

Test Flow Validation

Same as Smoke Test Flow + automated validation, PASS/FAIL judgment along with Severity/Consistency report.

A smarter type of automated testing, with validation (judgment) of failures implemented. Helps to quickly identify known defects, or to create defect reports for newly discovered ones.

Gives a greater time-saving advantage, since manual validation is not necessary.
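One simple way to sketch the "known defect" judgment is to match each failure message against a list of known-defect signatures. This assumes failures are reported as text messages and that each known defect can be recognized by a regular expression; `KnownDefect`, the IDs, and the severities are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class KnownDefect:
    defect_id: str  # hypothetical defect-tracker ID
    pattern: str    # regular expression matched against the failure message
    severity: str


def judge(failures: List[str], known: List[KnownDefect]) -> Dict:
    """Give a PASS/FAIL verdict and split failures into known defects and new ones."""
    result: Dict = {"verdict": "FAIL" if failures else "PASS", "known": [], "new": []}
    for message in failures:
        match = next((d for d in known if re.search(d.pattern, message)), None)
        if match is not None:
            result["known"].append((match.defect_id, match.severity, message))
        else:
            # Not recognized: a candidate for a new defect report.
            result["new"].append(message)
    return result


if __name__ == "__main__":
    known = [KnownDefect("DEF-101", r"Premium total .* mismatch", "Medium")]
    print(judge(["Premium total 120.00 vs 125.00 mismatch",
                 "Screen 'Billing' not reached"], known))
```

The signature list is the part that needs maintenance: whenever a defect is fixed, its entry should be removed so a regression is reported as new.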

Regression Test Flow

Same as Test Flow Validation + on-screen validations (application response, contents of dropdowns, presence or absence of dynamic GUI, etc.).

While keeping the robustness and validation features, it provides additional testing functionality (not directly related to the business logic flow) for Functional/Regression testing needs.

However, building additional verification & validation requires stronger business logic support from a Subject Matter Expert, and extra effort to build up and maintain data.
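The on-screen validations can be sketched as comparisons between what the screen shows and an expected baseline, which is where the SME-supplied data comes in. This assumes the script can already read a dropdown's items and an element's visibility from the GUI; both helper functions and the sample values are hypothetical.

```python
from typing import List


def validate_dropdown(name: str, actual: List[str], expected: List[str],
                      ordered: bool = True) -> List[str]:
    """Compare on-screen dropdown contents with expected values; return discrepancies."""
    issues = []
    missing = [v for v in expected if v not in actual]
    extra = [v for v in actual if v not in expected]
    if missing:
        issues.append(f"{name}: missing {missing}")
    if extra:
        issues.append(f"{name}: unexpected {extra}")
    # Same items but different order (or duplicated entries).
    if ordered and not missing and not extra and actual != expected:
        issues.append(f"{name}: sequence differs, got {actual}")
    return issues


def validate_presence(name: str, is_displayed: bool,
                      should_be_displayed: bool) -> List[str]:
    """Check the presence or absence of a dynamic GUI element."""
    if is_displayed == should_be_displayed:
        return []
    state = "present" if is_displayed else "absent"
    return [f"{name}: unexpectedly {state}"]


if __name__ == "__main__":
    print(validate_dropdown("State", ["NY", "CT"], ["NY", "NJ"]))
    print(validate_presence("Discount panel", True, False))
```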

Report Validation

Query the system by submitting user requests through the front end, and validate the resulting reports.

“Gray Box” testing of stored procedures implementing SQL queries can be covered very effectively with automated testing scripts. In addition, queries may cover boundary and overflow conditions.
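A minimal sketch of the gray-box idea: compute the figures a report should show with an independent query against the database, then compare them with the values the front end displayed. It uses an in-memory SQLite database and a hypothetical `sales` table; SQLite has no stored procedures, so a plain SQL query stands in as the independent check, and the `shown` dictionary stands in for values read from the report page.

```python
import sqlite3
from typing import Dict, List


def expected_report(conn: sqlite3.Connection, region: str) -> Dict[str, float]:
    """Independent query for the figures the report should display."""
    rows = conn.execute(
        "SELECT product, SUM(amount) FROM sales WHERE region = ? GROUP BY product",
        (region,),
    ).fetchall()
    return {product: total for product, total in rows}


def compare_report(shown: Dict[str, float], expected: Dict[str, float],
                   tolerance: float = 0.005) -> List[str]:
    """List every report line that is missing, unexpected, or off by more than tolerance."""
    issues = []
    for product in sorted(set(shown) | set(expected)):
        got, want = shown.get(product), expected.get(product)
        if got is None or want is None or abs(got - want) > tolerance:
            issues.append(f"{product}: shown={got} expected={want}")
    return issues


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, product TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)",
                     [("East", "A", 10.0), ("East", "A", 5.5), ("East", "B", 3.0)])
    # 'shown' stands in for values scraped from the front-end report page.
    shown = {"A": 15.0, "B": 3.0}
    print(compare_report(shown, expected_report(conn, "East")))
```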

Positive/Negative Boundary Testing and Data Acceptance

Test the application’s ability to handle values at the borders of the allowed range, and outside of it. Check for overflows and crashes.

As Negative Testing, validate the application’s response to intentionally invalid data input, wrong combinations of data, or wrong user actions.

The complexity and coverage of this type of automated testing are nearly as thorough as manual ad hoc testing. However, a reasonable limit on the number of test flows to automate should be set (based on a risk assessment of impact), to avoid code maintenance issues.
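For a numeric field with an allowed range, the classic boundary cases are the two borders, the values just inside them, and the values just outside. A minimal sketch, assuming an integer range and a hypothetical `accepts(value)` hook that submits the value and reports whether the application accepted it; an exception from the hook is treated as a crash.

```python
from typing import Callable, List, Tuple


def boundary_cases(low: int, high: int) -> List[Tuple[int, bool]]:
    """Values at, just inside, and just outside the allowed range [low, high]."""
    return [
        (low - 1, False), (low, True), (low + 1, True),
        (high - 1, True), (high, True), (high + 1, False),
    ]


def run_boundary_tests(accepts: Callable[[int], bool],
                       low: int, high: int) -> List[str]:
    """accepts(value) is a hypothetical hook that submits a value to the field."""
    failures = []
    for value, should_accept in boundary_cases(low, high):
        try:
            accepted = accepts(value)
        except Exception as exc:  # a crash is a failure in its own right
            failures.append(f"{value}: crashed ({type(exc).__name__})")
            continue
        if accepted != should_accept:
            failures.append(f"{value}: accepted={accepted}, expected {should_accept}")
    return failures


if __name__ == "__main__":
    # An off-by-one validator for an age field limited to 18..65.
    print(run_boundary_tests(lambda v: 18 <= v < 65, 18, 65))
```

Keeping the case list generated rather than hard-coded per field is one way to respect the maintenance limit mentioned above.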

Reliability

– How reliable must test results be? Can testers trust test results without manually reproducing the case, or does a failure only mean that “something went wrong” and needs to be investigated manually?

Full manual investigation

Test results require manual processing to find out whether the FAILs that occurred were actual defects in the application. Manual processing means manually re-testing the failed Test Case to find the reason for the failure.

Final human judgment

Each failure is reported in detail, showing the application state and the level of impact. There is no need to reproduce the Test Case manually.

Known fails are analyzed and reported by the script.

Root cause analysis is performed by a human.
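What "reported in detail" might contain can be sketched as a failure record that carries enough context (step, screen, expected vs. actual, input data, impact) for a human to judge the FAIL without re-running the case. The `FailureRecord` structure and its fields are a hypothetical illustration, not a prescribed format.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class FailureRecord:
    """Hypothetical structure: enough context to judge a FAIL without re-running it."""
    test_case: str
    step: int
    screen: str
    expected: str
    actual: str
    impact: str                          # e.g. "blocks flow" or "cosmetic"
    inputs: Dict[str, str] = field(default_factory=dict)
    known_defect: Optional[str] = None   # set when the script matched a known defect

    def summary(self) -> str:
        tag = f"known {self.known_defect}" if self.known_defect else "NEW"
        data = ", ".join(f"{k}={v}" for k, v in self.inputs.items())
        return (f"[{tag}] {self.test_case} step {self.step} on '{self.screen}': "
                f"expected {self.expected!r}, got {self.actual!r} "
                f"(impact: {self.impact}; inputs: {data})")


if __name__ == "__main__":
    rec = FailureRecord("TC_017", 4, "Billing", "125.00", "120.00",
                        "blocks flow", {"plan": "Gold"})
    print(rec.summary())
```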

Volume

– How many automated Test Cases do you want to use, and how do you want them to be organized?

Execute single TC

Each Test Case script is started manually.

This is still more manual than automated testing.

Execute hard-coded Test Scenario

Following the Test Plan, a developer creates a batch of Test Case scripts. A batch file performs the execution calls in a defined sequence, and the Test Case scripts are used “as is”. That means any individual configuration/customization of Test Case scripts must be performed prior to batch execution.

With multi-environment or multi-dataset test requirements, batch execution is less convenient due to the manual maintenance effort required.

Execute customizable Test Plan

List/sequence of Test Cases with customizable Test Data Input, Application Release, Benchmarks, Reporting, etc.

End-users (BA, QA) can build / modify Test Plans from the previously automated Test Cases available; no programming is required.

The Test Driver script automatically analyzes and invokes the scheduled Test Cases, passing user-defined high-level input, such as the Application / URL to use and the Test Data set. Using customizable keys, the Tester can set Test Plan benchmarks and control the automated test flow at the Test Plan level.

Built and baselined Test Plans can be used successfully by Dev, BA, and non-technical Jr. QA staff.
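The Test Driver idea can be sketched as a loop over a Test Plan that looks up each scheduled Test Case in a registry of already-automated ones and passes it the plan-level URL and dataset. This assumes the plan is a simple dictionary (in practice it might be loaded from a spreadsheet or file), and the keys `enabled`, `dataset`, and `max_failures` are hypothetical examples of the customizable keys described above.

```python
from typing import Callable, Dict, List, Tuple

# A previously automated Test Case: takes the application URL and a dataset,
# returns True for PASS. All names used below are hypothetical.
CaseFn = Callable[[str, Dict], bool]


def run_test_plan(plan: Dict, registry: Dict[str, CaseFn],
                  datasets: Dict[str, Dict]) -> List[Tuple[str, str]]:
    """Invoke the scheduled Test Cases in order with the plan-level URL and dataset."""
    url = plan["url"]
    max_failures = plan.get("max_failures")  # hypothetical benchmark key
    results: List[Tuple[str, str]] = []
    failures = 0
    for entry in plan["test_cases"]:
        name = entry["name"]
        if not entry.get("enabled", True):
            results.append((name, "SKIPPED"))
            continue
        case = registry.get(name)
        if case is None:
            results.append((name, "NOT AUTOMATED"))
            continue
        # A Test Case may override the plan's default dataset.
        data = datasets[entry.get("dataset", plan["dataset"])]
        passed = case(url, data)
        results.append((name, "PASS" if passed else "FAIL"))
        if not passed:
            failures += 1
        if max_failures is not None and failures >= max_failures:
            results.append(("<plan>", "STOPPED: failure benchmark reached"))
            break
    return results


if __name__ == "__main__":
    registry = {"login": lambda url, d: url.startswith("https://"),
                "quote": lambda url, d: d["age"] >= 18}
    datasets = {"std": {"age": 30}, "minor": {"age": 16}}
    plan = {"url": "https://qa.example.test", "dataset": "std",
            "test_cases": [{"name": "login"}, {"name": "quote", "dataset": "minor"}]}
    print(run_test_plan(plan, registry, datasets))
```

Because the plan is data rather than code, switching environment or dataset means editing one value, which is the advantage over the hard-coded batch described earlier.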

The overview of Usability requirements will continue with Transparency, Operation, Design, and Swiftness.