WTA05: Test the Envisioned

This is my second post on the testing challenge.

When I was thinking of the main idea of the challenge, I thought of it as “testing the envisioned product”. Yet, while preparing for today’s post, I wanted to reference one of Michael Bolton‘s articles about testing in retrospective, and the name “testing in prospective” popped up. I googled it, only to find that both “Prospective Testing” and “Inspective Testing” had already been coined.

Prospective Testing vs. Inspective Testing

Actually, this is the title of a blog post by Antony Marcano, who attributes these terms to a lengthy discussion he had with James Bach.

So, as defined…

“Inspective Testing” helps us to understand more about the product we’ve built.

“Prospective Testing” helps us to understand more about what we expect from the product we are about to build.

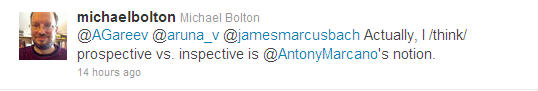

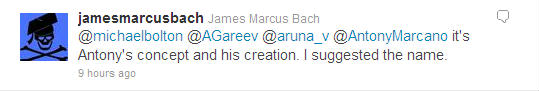

Update: clarification by Michael Bolton and James Bach on Twitter

And how does “Prospective Testing vs. Inspective Testing” relate back to the challenge?

– We had a product representing (as a prototype) another product.

And such testing was hard. Hard in terms of choosing the right testing approach. Hard in terms of finding the problems that would really bother the true customer.

For sure, context-revealing questions (again, Michael Bolton, Developsense) help.

Who is the customer of the product?

How is this one expected to be the same as, or different from, the other ones?

Here’s what I’m thinking right now. What else might be true? What if the opposite were true?

What’s hard is keeping all these questions constantly in mind, or at least raising them often enough while testing…

Now, back to the testing of a product representing another product.

Isn’t it the same with “real” applications? Page, spreadsheet, dashboard, chat,… If we test a product intended to replace some original product or object, shouldn’t we look at the value provided by the original, and see whether a replica can provide the same or better value? Or see whether a customer (user of the product) can get the same result [more easily] without using our product, or by using a competitor’s product?

What I can see, looking from this angle, is that many [software] products are designed as a functional replica of what the original does, without understanding what customers really need! Then (surprise, surprise!), a product with only “minor” functional defects is rejected because it has no value.

If not testers, then who?

In part one I made a point about layered code: when using high-level functions at the top of the hierarchy, programmers may have no idea what’s actually happening underneath. Moreover, the behavior is not exactly the same every time.

Nowadays, development components, these “LEGO” building blocks for programmers, are products themselves. If we leave testing of such products to programmers, we will probably end up with a good set of checks. But do these checks consider value for the customer, or do they only confirm that functions return the same numbers?

Do the checks encode how a customer will be using the code modules? Who will the customer be? Are there any threats to the value of the product?

(That brings us back to the approach of prospective testing.)

But this also raises a question: should testers know programming, and to what degree?

And how should testers use that knowledge? Should they be involved in code reviews and in writing automated checks to confirm that the functionality works, or should they use their programming skills to test the implemented functionality?

Note that many code components are now sold as “requiring minimal programming skills”, since prospective customers are not necessarily professional programmers.

It’s closer than you think!

Some time ago I blogged about an example of how non-programmers / non-testers used a programmable product, with the result that people were harmed (radiation overdose).

Nowadays, many electrical and mechanical devices can be enhanced by programmable control units. That includes house circuits, automotive components, medical and office equipment (not to mention gadgets and electronic devices that already come programmable). They can be programmed and re-programmed by non-programmers who are the end-users.

When end-users install a program, they typically have to agree to “given as is” and “no liability presumed” terms. Are the customers of those end-users aware of such agreements?

4 responses to "WTA05: Test the Envisioned"

Nice post. I recently attended a presentation on medical equipment testing and can see how this is something we should be watching for. It’s not like we can afford to miss a few scenarios because we didn’t learn about them from our inspection of the product. I think the importance of prospective testing goes up as the risk to the end user goes up. For banking, health, flight navigation systems, etc., we would need a deeper understanding.

[ Albert’s reply.

Hi Shilpa,

Exactly, and we can also say this is true Black Box testing. We don’t care about the encoded functionality; we look at whether the product is capable of performing the functions the client needs.

Thanks. ]

Hi,

Very informative article. It’s quite true that prospective testing should be done with 2 questions in mind:

1) How is the product different?

2) How does it create better value for customers?

[ Albert’s reply.

Thanks for your comment.

I see a little bit of a mix-up in your response, so let me restate.

“Prospective Testing vs. Inspective Testing” was defined by James Bach. If you haven’t done so yet, make sure his works are at the top of your list.

“Context-revealing questions” greatly help to stay focused on the real (important for customers) problems. Again, to the top of your list: Michael Bolton, Developsense.com. ]

Usually we focus only on functional defects: whether the method works as expected or whether the function returns the proper value.

And this is the problem. That’s not testing, that’s checking. If testers still catch unit-level bugs, they should question the quality of TDD on the project.

The prospective testing approach defined in this article is indeed the best approach for testing new products yet to be built.

Thanks for this insightful article.

Regards,

Aruna

http://technologyandleadership.com/

Thanks for the response. I will definitely look up the mentioned websites for more on context-revealing questions and prospective testing.

BTW, improving TDD is a good suggestion for reducing the number of functional defects.

Regards,

Aruna

http://technologyandleadership.com/

“The intersection of Technology and Leadership”

Albert, thanks for writing this. Very compelling and helped me see the session in a slightly different light.

As you remember, some of us were looking at the application as a game, and others were looking at it as a simulation of a real-life robot performing tasks. The context of the situation helped inform the testing requirements and expected outcomes; those who tested it as a game had a lot of questions related to playability and to how effectively the tasks could be completed from a gameplay perspective.

On the other hand, those who approached it as a simulation for a real robot had very different reactions and understanding of the functionality. Different focus makes for different understanding and different test approaches.

[ Albert’s reply.

Michael, it really is exciting to see how things can take unexpected turns. We came up with such a small testing project (time-wise) and put effort into making the mission as clear as possible, yet we missed how it could be misunderstood. Although “prospective testing” was the main learning goal of the mission, we also witnessed, and learned from, the appearance of a black swan.

Thanks ]