QA Test Automation Requirements (3) – Robustness

Original date: 28 Apr 2009, 1:00pm

Quality Assurance Functional/Regression Automated Testing and Test Automation Requirements: Usability, Maintainability, Scalability, and Robustness.

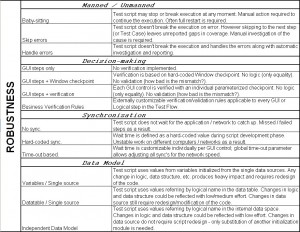

Robustness Requirements Matrix

Manned / Unmanned Test Execution

– What level of manual effort in automated testing would be acceptable to you?

Baby-sitting

The test script may stop or break execution at any moment. Manual action is required to continue the execution, and often a full restart is required.

This is typical of scripts created with any variation of the Record/Playback method. Using standard GUI functions without prior GUI verification and synchronization causes similar problems.

Skip errors

The test script doesn’t break the execution on error. However, skipping to the next step (or Test Case) leaves unreported gaps in coverage, and manual investigation of the cause is required.

Execution at the Test Plan level is quite robust because one failed Test Case doesn’t stop the whole sequence. At the Test Case level, however, such an implementation seriously reduces coverage. For a long-flow Test Case that visits 5 or more screens, exiting on the first error at the first screen leaves over 50% of the Test Case uncovered.

In manual testing, a minor failure requires documentation but doesn’t require stopping the testing activity on the screen.

Handle errors

The test script doesn’t break the execution; it handles errors with automatic investigation and reporting.

The implementation should include catching internal errors and Test Flow exceptions (pop-up dialogs and other unexpected events interrupting the normal flow), recognizing the problem, and performing investigation and recovery steps, along with detailed reporting.

Manual testing example.

1) Select payment currency USD (dropdown listbox).

2) Select payment type WIRE (dropdown listbox).

3) Press “Make Payment” (button).

4) Verify payment details on the Confirmation screen.

Possible fails at step (1)

1A. Failed to find dropdown. Severity – High. Can continue testing? Yes. Report absence of the object.

1B. Failed to use dropdown because it’s disabled. Severity – Medium. Can continue testing? Yes. Report state of the object.

1C. Failed to select item USD in the list. Severity – Medium. Check other items available (CAD, AUD). Report absence of required item and other items available. Can continue testing? Yes.

Possible fails at step (2)

2A. Failed to find dropdown. Severity – High. Can continue testing? Yes. Report absence of the object.

2B. Failed to use dropdown because it’s disabled. Severity – Medium. Can continue testing? Yes. Report state of the object.

2C. Failed to select item WIRE in the list. Severity – Medium. Check other items available (CASH, CHEQUE). Report absence of required item and other items available. Can continue testing? Yes.

Possible fails at step (3)

3A. Failed to find button. Severity – ShowStopper. Can continue testing? No. Report absence of the object.

3B. Failed to press button. Severity – ShowStopper. Can continue testing? No. Report state of the object.

At step 4 (as well as before going into step 1), the absence of the screen (Window, Dialog, or Web page) is a ShowStopper failure for the script.

Now, what if the script exits at the first failure encountered? Even with 4 simple steps, coverage is reduced to 20% of what is possible when the same testing is done manually!
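The contrast between exiting on the first failure and handling errors can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `StepFailure` exception, the `Step` class, the `run_flow` function, and the simulated step functions are hypothetical names invented here, and the failure at step 1 is simulated rather than taken from a real application.

```python
from dataclasses import dataclass
from typing import Callable, List

SHOWSTOPPER = "ShowStopper"


class StepFailure(Exception):
    """Hypothetical exception: a failed step with a severity and a report message."""

    def __init__(self, severity: str, message: str):
        super().__init__(message)
        self.severity = severity


@dataclass
class Step:
    name: str
    action: Callable[[], None]


def run_flow(steps: List[Step], stop_on_first_failure: bool = False) -> List[str]:
    """Run the steps in order and return one report line per executed step."""
    report = []
    for step in steps:
        try:
            step.action()
            report.append(f"PASS {step.name}")
        except StepFailure as failure:
            report.append(f"FAIL {step.name} [{failure.severity}]: {failure}")
            # Only a ShowStopper (or the fragile mode) ends the flow early.
            if stop_on_first_failure or failure.severity == SHOWSTOPPER:
                break
    return report


def select_currency() -> None:
    # Simulated failure 1C: the required item is missing from the list.
    raise StepFailure("Medium", "item USD not found; available: CAD, AUD")


def select_payment_type() -> None:
    pass  # simulated success


def press_make_payment() -> None:
    pass  # simulated success


def verify_confirmation() -> None:
    pass  # simulated success


steps = [
    Step("1 select currency", select_currency),
    Step("2 select payment type", select_payment_type),
    Step("3 press Make Payment", press_make_payment),
    Step("4 verify confirmation", verify_confirmation),
]

fragile = run_flow(steps, stop_on_first_failure=True)
robust = run_flow(steps)
print(len(fragile), "step(s) executed when exiting on the first failure")
print(len(robust), "step(s) executed when handling errors")
for line in robust:
    print(line)
```

In this sketch the Medium failure at step 1 is reported and the flow continues, while a failure raised with the ShowStopper severity would still end the flow, as in cases 3A and 3B.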

Automated Decision-making

– Does the script perform only GUI input, or also verification, logical judgment, test status evaluation, and overall validation of the Test Case?

GUI steps only

With no verification implemented, GUI data entry has low relevance to testing.

GUI steps + Window checkpoint

Verification is based on a hard-coded Window checkpoint. No logic (only equality). No validation (how bad is the mismatch?).

This approach used to be very popular and seemed to be a good workaround for reaching a detailed level of verification without implementing verification logic. However, a huge data maintenance effort emerged very quickly, because every single data change causes the checkpoint to fail. The validation of test results still had to be performed manually, which for complicated checkpoints takes time comparable to manually testing the application itself. The static nature of this verification type revealed another problem: functionality based on the on-screen application response or on dynamically changing data could not be verified with a hard-coded checkpoint.

GUI steps + verification

Each GUI control is verified with an individual parameterized checkpoint. No logic (only equality). No validation (how bad is the mismatch?).

Splitting one consolidated window checkpoint into individual GUI control checkpoints reduced the maintenance effort. Some tools (like QTP) allow parameterization of the expected result, which improves maintainability even more. However, verification is still based on the “equal or not” rule only, while business requirements may say “equal with rounding”, “greater/less than”, or even “includes / included”. And validation of the comparison is still performed manually.
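A minimal sketch of comparison rules that go beyond “equal or not” might look like the following. The rule names, the `RULES` table, and the `verify` function are assumptions made for illustration; they are not the API of QTP or of any other tool.

```python
from typing import Callable, Dict


def equal(actual: str, expected: str) -> bool:
    return actual == expected


def equal_rounded(actual: str, expected: str, digits: int = 2) -> bool:
    # "Equal with rounding": compare numeric values after rounding both sides.
    return round(float(actual), digits) == round(float(expected), digits)


def greater_than(actual: str, expected: str) -> bool:
    return float(actual) > float(expected)


def less_than(actual: str, expected: str) -> bool:
    return float(actual) < float(expected)


def includes(actual: str, expected: str) -> bool:
    # The on-screen text contains the expected fragment.
    return expected in actual


def included_in(actual: str, expected: str) -> bool:
    # The on-screen text is a fragment of the expected text.
    return actual in expected


# Hypothetical rule table: a rule name selects the comparison to apply.
RULES: Dict[str, Callable[[str, str], bool]] = {
    "equal": equal,
    "equal_rounded": equal_rounded,
    "greater_than": greater_than,
    "less_than": less_than,
    "includes": includes,
    "included_in": included_in,
}


def verify(rule: str, actual: str, expected: str) -> bool:
    """Apply the named comparison rule to an actual and an expected value."""
    return RULES[rule](actual, expected)


print(verify("equal", "100.004", "100.00"))
print(verify("equal_rounded", "100.004", "100.00"))
print(verify("includes", "Payment of 100.00 USD accepted", "USD"))
```

With a plain equality checkpoint the first comparison fails, although a business rule of “equal with rounding” would accept the same values.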

Business Verification Rules

Externally customizable verification/validation rules applicable to every GUI or Logical step in the Test Flow.

To reach this level of convenience, a Hybrid or Model-based Framework should be in place. In addition, the Framework should have a user-friendly “front-end” that allows designing and customizing verifications in the Test Flow without programming.
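As a rough illustration of externally customizable rules, the sketch below keeps the rules in CSV text, which stands in for a spreadsheet maintained outside the script. The column names, the rule names, the severity values, and the sample screen values are all hypothetical; a real framework would define its own.

```python
import csv
import io
from typing import Dict, List, Tuple

# Stand-in for an external rules file that a tester can edit without programming.
RULES_CSV = """step,field,rule,expected,severity
confirmation,currency,equal,USD,High
confirmation,amount,at_least,100,Medium
confirmation,message,includes,accepted,Low
"""

# Comparison logic lives in the framework; the rule table only names it.
CHECKS = {
    "equal": lambda actual, expected: actual == expected,
    "at_least": lambda actual, expected: float(actual) >= float(expected),
    "includes": lambda actual, expected: expected in actual,
}


def load_rules(text: str) -> List[Dict[str, str]]:
    """Parse the rule table into one dictionary per rule."""
    return list(csv.DictReader(io.StringIO(text)))


def evaluate(rules: List[Dict[str, str]], step: str,
             actual_values: Dict[str, str]) -> List[Tuple[str, str, str]]:
    """Return (field, status, severity) for every rule attached to the step."""
    findings = []
    for rule in rules:
        if rule["step"] != step:
            continue
        actual = actual_values[rule["field"]]
        passed = CHECKS[rule["rule"]](actual, rule["expected"])
        # Severity is reported only for failures: "how bad is the mismatch?"
        findings.append((rule["field"],
                         "PASS" if passed else "FAIL",
                         "" if passed else rule["severity"]))
    return findings


# Simulated values read from the Confirmation screen.
screen = {"currency": "USD", "amount": "99.50", "message": "Payment accepted"}
findings = evaluate(load_rules(RULES_CSV), "confirmation", screen)
for finding in findings:
    print(finding)
```

Changing a rule, an expected value, or a severity then means editing a row in the table rather than the script.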

Synchronization

– Do you want an automated Test Flow to run successfully on different computers, networks, and environments?

No sync.

The test script does not wait for the application / network to catch up, which results in missed / failed steps. Modern testing tools try to minimize failures by setting an overall timeout applied to all GUI steps by default. When the synchronization time fits within the default timeout (usually 30-60 seconds), the problem is resolved. However, the major steps (login, submitting a report, creating a new record) may require a significantly longer waiting time, depending on network speed and server performance.

Hard-coded sync.

The wait time is defined as a hard-coded value during the script development phase. The result is unstable behavior on different computers / networks.

Example.

During the script development phase, it took about 3 seconds for the report window to appear. A “Wait (5)” instruction was added, giving the window 5 seconds to appear. However, during the test execution phase certain reports took much longer to generate, and the script failed all of those test cases.

Time-out based

The wait time is customizable individually per GUI step; a global time-out parameter allows adjusting all syncs for the network speed.

Most Testing Tools provide various synchronization statements. However, that code is not generated automatically during recording. It must be added programmatically before the GUI instruction or as part of a custom wrapper GUI function.

The difference between “Wait(30)” and “Sync(30)” is in the exit condition. In the first case, the script just waits for 30 seconds, doing nothing.

In the second case, the script keeps checking whether the GUI has become available for up to 30 seconds, and continues as soon as it does.
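The two exit conditions can be sketched in Python as follows. The `wait` and `sync` functions and the `GLOBAL_TIMEOUT_FACTOR` setting are hypothetical stand-ins, not the statements of any particular testing tool, and the "report window" is simulated with a timer instead of a real GUI.

```python
import time
from typing import Callable

# Hypothetical global parameter: scales every sync timeout for a slow network.
GLOBAL_TIMEOUT_FACTOR = 1.0


def wait(seconds: float) -> None:
    """Hard-coded wait: always blocks for the full time, whatever the GUI does."""
    time.sleep(seconds)


def sync(condition: Callable[[], bool], timeout: float,
         poll_interval: float = 0.1) -> bool:
    """Poll the condition until it holds or the (scaled) timeout expires."""
    deadline = time.monotonic() + timeout * GLOBAL_TIMEOUT_FACTOR
    while True:
        if condition():
            return True  # exit as soon as the GUI is ready
        if time.monotonic() >= deadline:
            return False  # timed out: the caller reports a failed step
        time.sleep(poll_interval)


# Simulated GUI: the report window "appears" 0.3 seconds from now.
appears_at = time.monotonic() + 0.3


def report_window_exists() -> bool:
    return time.monotonic() >= appears_at


started = time.monotonic()
found = sync(report_window_exists, timeout=5)
elapsed = time.monotonic() - started
print("window found:", found, "after about", round(elapsed, 1), "seconds")
```

Here the sync returns shortly after the window appears instead of using up the whole 5 seconds, and raising `GLOBAL_TIMEOUT_FACTOR` lengthens every timeout at once without touching individual steps.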

Data Model

– How flexible is the Test Automation Framework in supporting test data of various, customizable volumes, and how quickly can it be adjusted to new test requirements?

The general difference is in the automation approach. In “manual automation”, every manual test case is automated individually. This is convenient for the “automation developer” but creates numerous duplications in code and data, which makes such scripts very inconvenient to use and maintain. In Test Flow Automation, multiple test cases that use the same screens and have the same major PASS/FAIL conditions are covered by a single script, with test data controlling the flow (data-driven).
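A data-driven flow of this kind can be sketched as one script plus a table of test cases. Everything named here is an assumption for illustration: the case IDs, the column names, and `fake_make_payment`, which stands in for driving the real application screens.

```python
from typing import Dict, List

# Each row is one test case; adding a case means adding a row, not a script.
TEST_DATA: List[Dict[str, str]] = [
    {"case": "TC-01", "currency": "USD", "type": "WIRE", "expected": "accepted"},
    {"case": "TC-02", "currency": "CAD", "type": "CHEQUE", "expected": "accepted"},
    {"case": "TC-03", "currency": "EUR", "type": "WIRE", "expected": "rejected"},
]


def fake_make_payment(currency: str, payment_type: str) -> bool:
    """Stand-in for the application under test: accepts only known values."""
    return (currency in {"USD", "CAD", "AUD"}
            and payment_type in {"WIRE", "CASH", "CHEQUE"})


def payment_flow(row: Dict[str, str]) -> str:
    """Single Test Flow script: the data row controls inputs and expectations."""
    accepted = fake_make_payment(row["currency"], row["type"])
    expected_accepted = row["expected"] == "accepted"
    return "PASS" if accepted == expected_accepted else "FAIL"


results = {row["case"]: payment_flow(row) for row in TEST_DATA}
for case, status in results.items():
    print(case, status)
```

The same `payment_flow` covers positive and negative cases because the expected outcome travels with the data row.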

Variables / Single source

The test script uses values from variables initialized from a single data source. Any change in logic, data structure, etc. has a heavy impact and requires redesign of the code.

Datatable / Single source

The test script refers to values by logical name in a data table imported from a defined source (external data spreadsheet). Changes in logic and data structure can be reflected with low/medium effort. Changes in the data source still require redesign/modification of the code.

Independent Data Model

The test script refers to values by logical name in the internal data space. Changes in logic and data structure can be reflected with low effort. Changes in the data source do not require script redesign; only the initialization module needs to be replaced with another one.
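One way to picture the Independent Data Model is a script that only knows logical names, with interchangeable initialization modules filling the internal data space. The sketch below assumes hypothetical logical names such as `payment.currency` and two hypothetical initializers, one reading CSV text and one taking values defined in code.

```python
import csv
import io
from typing import Dict, Mapping

DataSpace = Dict[str, str]  # internal data space: logical name -> value


def init_from_csv(text: str) -> DataSpace:
    """Initialization module 1: fill the data space from CSV text."""
    reader = csv.DictReader(io.StringIO(text))
    return {row["name"]: row["value"] for row in reader}


def init_from_mapping(values: Mapping[str, str]) -> DataSpace:
    """Initialization module 2: fill the data space from values defined in code."""
    return dict(values)


def payment_script(data: DataSpace) -> str:
    """The script refers to logical names only and never to the data source."""
    return (f"pay {data['payment.amount']} {data['payment.currency']} "
            f"by {data['payment.type']}")


CSV_SOURCE = """name,value
payment.currency,USD
payment.type,WIRE
payment.amount,100.00
"""

from_csv = payment_script(init_from_csv(CSV_SOURCE))
from_code = payment_script(init_from_mapping({
    "payment.currency": "USD",
    "payment.type": "WIRE",
    "payment.amount": "100.00",
}))
print(from_csv)
print(from_code)
```

Swapping the data source changes only which initializer is called; `payment_script` itself stays untouched.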