Methods and Tools for Data-Driven API Testing

This article was published on StickyMinds: Methods and Tools for Data-Driven API Testing, September 2017.

Software testing has many forms and breeds, but one major distinction has always been based on the approach—either working with the code or interacting with the product. The former was typically a prerogative of programmers while testers have concerned themselves with the latter. The industry even came up with the famous analogies of glass-box testing and black-box testing, meaning that you either see what’s going on inside the box or you have no idea, observing only input and output.

However, modern software systems are boxes within boxes within boxes ad infinitum. Programmers use third-party libraries without knowledge of their code. Products interact with each other through integration protocols. At the same time, testing feedback is needed way before a product gets a user interface. Some products, like internet of things devices and wearables, do not have a UI at all.

While programmers take responsibility for more testing, testers may want to increase their value by becoming more technical. This includes using developer tools and learning to do a little coding on their own in order to test programs at the API level.

A Quick Introduction to API Testing

An application programming interface, or API, is a set of rules specifying interaction protocols between software “boxes.” API testing is a form of black-box testing: you interact with a function without knowing what’s going on inside, feeding inputs and evaluating outputs.

Internet applications use web services APIs in the form of either SOAP (Simple Object Access Protocol) or REST (Representational State Transfer). There are technical and architectural differences between these two communication methods that would be of interest to programmers, but they can remain within the black box for testers. In terms of input and output, both use text-based formats: SOAP uses XML notation, and REST most commonly uses JSON notation. Both formats are easy to learn. Testers need to be able to read and compose them in order to prepare test inputs and evaluate outputs.

Imagine a web form with data fields First Name, Last Name, and Date of Birth. Instead of manually typing an input, the tester would need to prepare data snippets, like below.

Data snippet in XML:

<firstname>John</firstname>

<lastname>Smith</lastname>

<dob>1980/06/04</dob>

Data snippet in JSON:

{

"firstname": "John",

"lastname": "Smith",

"dob": "1980/06/04"

}

As you can see, the examples are easy to get started with.
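As a minimal sketch of reading such snippets programmatically, the Python below parses both formats with the standard library and shows that they carry the same data. The `<person>` wrapper element is an assumption added here, because a well-formed XML document needs a single root element; the field names come from the snippets above.

```python
import json
import xml.etree.ElementTree as ET

# Assumption: the three fields are wrapped in a <person> root element,
# since a well-formed XML document needs exactly one root.
xml_text = """
<person>
  <firstname>John</firstname>
  <lastname>Smith</lastname>
  <dob>1980/06/04</dob>
</person>
"""

json_text = '{"firstname": "John", "lastname": "Smith", "dob": "1980/06/04"}'


def xml_to_dict(text: str) -> dict:
    """Turn a flat XML record into a dict of tag -> text."""
    root = ET.fromstring(text.strip())
    return {child.tag: child.text for child in root}


def json_to_dict(text: str) -> dict:
    """Parse a JSON object into a dict."""
    return json.loads(text)


if __name__ == "__main__":
    print(xml_to_dict(xml_text))
    print(json_to_dict(json_text))
```

Once both inputs are plain dictionaries, the same comparison logic can be reused regardless of which format the service speaks.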

Methods and Tools for API Testing

There are a few ways to perform API testing.

At the simplest, you can just put the web service URI (that is, the path and parameters) into the browser address bar, and the response will be displayed within the browser window. There are basic and sophisticated add-ons available for free for all popular browsers, and there are free and commercial standalone tools. Finally, there are automation frameworks supporting different languages.

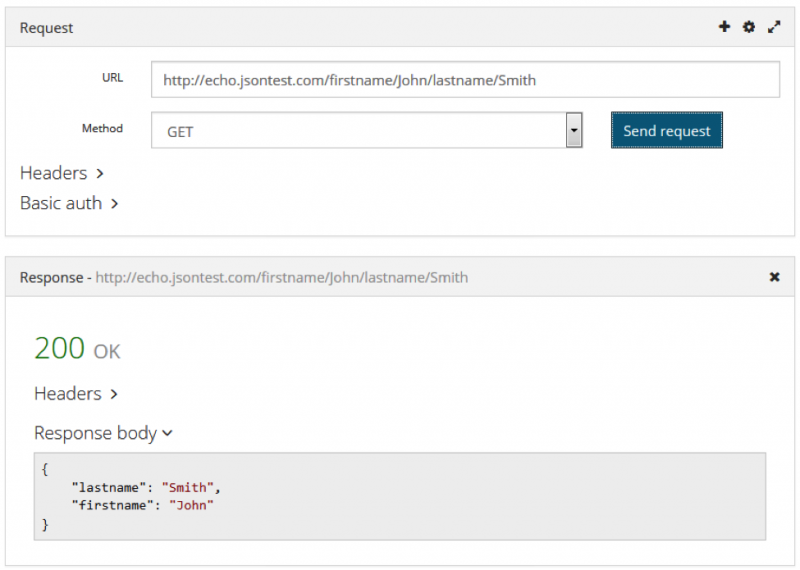

Here’s an example using the RESTED Firefox add-on to place a request and review the response from the free online service JSON Test.

Each method has its value. Quite often, a few tests need to be done quickly and don’t need to be repeated, so simple methods and tools are a good fit. If there’s a need for continuous testing after each code change and deployment, advanced tools and automation suites fit better.

The common denominator is risk. All testing is guided by explicit or implicit risk assessment. Risk assessment estimates the likelihood of something unwanted happening or negatively impacting the product as a direct or indirect result of code changes or build and promotion issues.

The most basic question about an API component, like a web service, would be, is it up and running? That question can be answered by making just one API call. If answered positively, developers may ask, does it properly handle correct and incorrect input and return the expected results? These questions might be further refined case by case with different data combinations.

Test Design and Execution

Test design is about answering these questions. We design and simulate various interactions with an API to explore and investigate its behavior.

If we organize our test questions from basic to deep coverage, we will end up with something like the following categories.

Availability

Checking whether a particular API function (or a few of them) is down is the most basic question. That can be answered with a single API call.
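A single-call availability check might look like the following sketch. The function name `is_available` and the injectable `opener` parameter are illustrative choices made here, not part of any tool mentioned in the article; the default opener is Python's standard `urllib.request.urlopen`.

```python
import urllib.request


def is_available(url: str, timeout: float = 5.0,
                 opener=urllib.request.urlopen) -> bool:
    """Return True if a single GET to `url` answers with a 2xx status."""
    try:
        with opener(url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except OSError:
        # URLError, HTTPError (raised for 4xx/5xx), refused connections,
        # and socket timeouts are all OSError subclasses.
        return False


# Usage against a hypothetical endpoint:
#   is_available("https://api.example.com/health")
```

Passing the opener as a parameter keeps the check easy to exercise without a live service, which is useful when the check itself is part of an automated suite.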

Functionality

Checking whether a sequence of API calls that are meant to work together in a scenario is available and behaves correctly gives shallow but broad coverage.

One example is continuously scrolling a map while the app visualizes points of interest around your point of view. This operation is simulated by a sequence of API calls feeding a geolocation.

Another example would be sending a search request in an online store, reviewing search results, and retrieving details of a found item. This operation is simulated by a sequence of API calls where data retrieved from the previous call might be used as parameters for the next one (for example, “item id”).
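The online store scenario can be sketched as two chained calls, where the item id returned by the search feeds the details request. Everything named here is hypothetical: the `search` and `item` endpoints, the response shapes, and the in-memory `fake_store` that stands in for a real service.

```python
from typing import Any, Callable, Dict

# Hypothetical signature: call(endpoint, params) -> parsed JSON response.
ApiCall = Callable[[str, Dict[str, Any]], Dict[str, Any]]


def search_then_fetch(call: ApiCall, query: str) -> Dict[str, Any]:
    """Search, take the first hit, and request its details by id."""
    results = call("search", {"q": query})
    items = results.get("items", [])
    assert items, f"search for {query!r} returned no items"

    item_id = items[0]["id"]  # data from the first call feeds the second
    details = call("item", {"id": item_id})
    assert details["id"] == item_id, "details do not match the requested item"
    return details


def fake_store(endpoint: str, params: Dict[str, Any]) -> Dict[str, Any]:
    """In-memory stand-in for the store API, used only for illustration."""
    catalog = {101: {"id": 101, "name": "Blue kettle", "price": 24.5}}
    if endpoint == "search":
        hits = [{"id": item_id} for item_id, item in catalog.items()
                if params["q"].lower() in item["name"].lower()]
        return {"items": hits}
    if endpoint == "item":
        return catalog[params["id"]]
    raise ValueError(f"unknown endpoint: {endpoint}")


if __name__ == "__main__":
    print(search_then_fetch(fake_store, "kettle"))
```

Against a real service, `call` would wrap an HTTP client; the scenario logic stays the same.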

Broad coverage also involves checking whether typical calls, such as those sending valid data and expecting a generic response, yield an invalid response or are incorrect in some way.

Input parameters

Input is what is sent into an API function when the call is made. Testing from the input perspective requires covering all variations of data input, including all equivalence classes of valid input and invalid input.

For example, boundary testing for a numeric field would include testing at the min boundary, one lower than min, way lower than min, one higher than min, one lower than max, at the max boundary, one higher than max, and as much as possible higher than max.
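The boundary list above can be generated rather than typed by hand. In this sketch the `far` offset is an assumption standing in for "way lower" and "as much as possible higher"; choose a value that suits the field under test.

```python
from typing import List


def boundary_values(minimum: int, maximum: int, far: int = 1000) -> List[int]:
    """Return the eight boundary probes for an integer field [minimum, maximum]."""
    return [
        minimum - far,  # way lower than min
        minimum - 1,    # one lower than min
        minimum,        # at the min boundary
        minimum + 1,    # one higher than min
        maximum - 1,    # one lower than max
        maximum,        # at the max boundary
        maximum + 1,    # one higher than max
        maximum + far,  # far higher than max
    ]


def expected_valid(value: int, minimum: int, maximum: int) -> bool:
    """The oracle: a value is valid only inside the inclusive range."""
    return minimum <= value <= maximum


if __name__ == "__main__":
    for value in boundary_values(1, 100):
        print(value, expected_valid(value, 1, 100))
```

Pairing each generated input with its expected verdict gives a ready-made data table for the API calls.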

You also would include data variations to test valid and invalid format, precision, encoding, and any other applicable business rules. For example, for a web service “GetUserByID” with “ID” as a parameter, thorough testing would require a sequence of calls like the following:

- Provide a legitimate ID

- Provide no ID (null input)

- Provide a non-numeric ID

- Provide a valid but nonexistent ID

- Provide a legitimate ID with the lowest number available

- Provide a legitimate ID with the highest number available

- Provide an ID with leading or trailing spaces
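The list above maps naturally onto a data table that drives one generic test routine. In the sketch below, the expected HTTP status codes, the ID range of 1 to 100, and the `fake_get_user_by_id` stand-in are all assumptions made for illustration; a real GetUserByID service defines its own contract.

```python
from typing import Any, Callable, List, Tuple

# (description, raw ID as sent, expected HTTP status). The status codes are
# assumptions about how a service might respond, not a documented contract.
CASES: List[Tuple[str, Any, int]] = [
    ("legitimate ID", "42", 200),
    ("no ID", None, 400),
    ("non-numeric ID", "abc", 400),
    ("valid but nonexistent ID", "999999", 404),
    ("lowest available ID", "1", 200),
    ("highest available ID", "100", 200),
    ("leading and trailing spaces", " 42 ", 400),
]


def fake_get_user_by_id(raw_id: Any) -> int:
    """Hypothetical stand-in for GetUserByID; users 1..100 exist."""
    if raw_id is None or not raw_id.isdigit():
        return 400
    return 200 if 1 <= int(raw_id) <= 100 else 404


def run_cases(service: Callable[[Any], int],
              cases: List[Tuple[str, Any, int]] = CASES) -> List[str]:
    """Run every row and return a list of failure descriptions."""
    failures = []
    for description, raw_id, expected in cases:
        actual = service(raw_id)
        if actual != expected:
            failures.append(f"{description}: expected {expected}, got {actual}")
    return failures


if __name__ == "__main__":
    print(run_cases(fake_get_user_by_id) or "all cases passed")
```

Adding a new variation then means adding one row to the table, not writing another test.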

Output parameters

Output is data that were returned from the API call. Data may come in different forms, like response code, response header, and response body returned by a web service.

Testing the output aspects requires reproducing all variations of output. To provoke a desired output, you need to know what to request. A peek into a database would be of great help, as you can find and inject peculiar field values there. For web services, you have to reproduce all intended HTTP response codes.

Whenever a data set is returned, reproduce cases like none, one, and max. For example, consider an online store returning a list of matching items. There could be none, or just one, or the returned data set may exceed the maximum number that can be displayed on one page—or the returned data set may be so big that it will slow down or crash the front-end.
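The none, one, and max cases can be driven from a short list of result-set sizes. This is only a sketch: the page size of 20, the response shape with `total` and `items`, and the `fake_search` function are assumptions, not details of any particular store.

```python
from typing import Dict, List

PAGE_SIZE = 20  # assumption: the front end shows 20 items per page


def fake_search(total_matches: int, page_size: int = PAGE_SIZE) -> Dict:
    """Hypothetical search response: first page of items plus the total count."""
    items: List[int] = list(range(total_matches))[:page_size]
    return {"total": total_matches, "items": items}


def check_first_page(response: Dict, page_size: int = PAGE_SIZE) -> List[str]:
    """Return problems found in a first-page response; empty list means OK."""
    problems = []
    if len(response["items"]) > page_size:
        problems.append("page holds more items than the page size")
    if len(response["items"]) != min(response["total"], page_size):
        problems.append("item count does not match total and page size")
    return problems


# none, one, exactly one page, one more than a page, and a very large set
SIZES = [0, 1, PAGE_SIZE, PAGE_SIZE + 1, 100_000]

if __name__ == "__main__":
    for size in SIZES:
        print(size, check_first_page(fake_search(size)))
```

With a real service, producing each size usually means seeding the database or choosing search terms known to match that many records.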

System state

Have you seen things like “most popular article” or “most searched merchandise”? As customers use software, certain statistics are collected that affect the behavior of the system. There are also other effects on the system state, including memory usage and possible leakage, as well as filling in and overflow of buffers and queues.

To test this aspect, reproduce all intended consequences and investigate if there are unintended ones.

Flow testing

A special testing aspect is simulating continuous usage of the API. Imagine users constantly searching for merchandise in that online store. The intent of such a simulation is not a verification of something particular, but rather observing if the software responds consistently over time or begins to manifest different errors.
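One way to observe consistency over time is to repeat the same call many times and tally what comes back. The sketch below assumes a `call` function that returns an HTTP status code; `make_degrading_service` is a hypothetical stand-in that starts failing after a set number of calls.

```python
from collections import Counter
from typing import Callable


def soak(call: Callable[[], int], iterations: int) -> Counter:
    """Call the API repeatedly and tally outcomes by status or exception name."""
    outcomes: Counter = Counter()
    for _ in range(iterations):
        try:
            outcomes[call()] += 1
        except Exception as exc:  # keep going; the tally is the result
            outcomes[type(exc).__name__] += 1
    return outcomes


def make_degrading_service(healthy_calls: int) -> Callable[[], int]:
    """Hypothetical service that returns 200, then 503 once it is worn out."""
    state = {"calls": 0}

    def call() -> int:
        state["calls"] += 1
        return 200 if state["calls"] <= healthy_calls else 503

    return call


if __name__ == "__main__":
    print(soak(make_degrading_service(90), 100))
```

A tally that drifts from all successes toward errors as the run continues is exactly the kind of signal flow testing looks for.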

Flow testing also includes simulation of timed-out and interrupted calls. If your system has functionalities for rejection and rollback of transactions, make sure to reproduce them, and verify what happens with data records. For example, let’s say you’re booking a movie ticket online. Typically, it’s a sequence of screens for movie selection, date and time selection, seat selection, and payment. As soon as seats are selected, they become reserved. But what if a payment was never made or didn’t come through? How many seats will be lost due to a fake or failed booking? To manage that problem, the booking sequence has a timeout. If the final call doesn’t succeed, all reservations should be released, and the customer should not be billed. While testing through the API, make sure to reproduce situations like this and investigate how the system handles a timeout and performs a rollback.
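The abandoned-booking case can be sketched against a small in-memory model. The `FakeBooking` class, its method names, and the 600-second hold are all hypothetical; the point is the shape of the test: reserve, let the timeout pass without paying, then check that the seat is free again and nobody was billed.

```python
class FakeBooking:
    """Hypothetical in-memory booking service with a reservation timeout."""

    def __init__(self, seats, hold_seconds, clock):
        self.free = set(seats)
        self.holds = {}      # seat -> time at which the hold expires
        self.charges = []    # seats that were actually billed
        self.hold_seconds = hold_seconds
        self.clock = clock

    def _release_expired(self):
        now = self.clock()
        for seat, expires in list(self.holds.items()):
            if now >= expires:
                del self.holds[seat]
                self.free.add(seat)

    def reserve(self, seat):
        self._release_expired()
        if seat not in self.free:
            return False
        self.free.remove(seat)
        self.holds[seat] = self.clock() + self.hold_seconds
        return True

    def pay(self, seat):
        self._release_expired()
        if seat not in self.holds:
            return False     # hold expired or never existed: no charge
        del self.holds[seat]
        self.charges.append(seat)
        return True

    def available(self):
        self._release_expired()
        return set(self.free)


def test_abandoned_booking_is_rolled_back():
    now = [0.0]  # fake clock, advanced manually instead of sleeping
    service = FakeBooking({"A1", "A2"}, hold_seconds=600, clock=lambda: now[0])

    assert service.reserve("A1")
    assert service.available() == {"A2"}        # seat is held, not free

    now[0] += 601                               # payment never arrives
    assert service.available() == {"A1", "A2"}  # hold released
    assert not service.pay("A1")                # late payment is rejected
    assert service.charges == []                # customer was not billed


if __name__ == "__main__":
    test_abandoned_booking_is_rolled_back()
    print("rollback scenario passed")
```

Injecting the clock avoids waiting out a real timeout; against a live system, a shortened timeout in the test environment serves the same purpose.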

Another aspect is testing with a high volume of data in input and output. That might be a request for an unusually large data record or set of records, or continuous throughput of data. This is similar to testing the system state, but the focus is on stressing amounts of data that the system should be able to handle.

Structure Your API Testing Needs

The lists of test design ideas above might look frighteningly long, but that’s because we reviewed very broad examples aiming for many kinds of risks. In practice, your level of coverage probably will be more constrained and specifically guided.

It is wise to test more important functions sooner and to spend more time on crucial aspects of a product. That calls for having a structured model and for having risks and priorities identified and monitored as the software development project unfolds.

Data-driven API testing can enable feedback much sooner and more often during development while being just as comprehensive as classic functional black-box testing. Testers looking to diversify their skills should consider learning some coding in order to test their programs at the API level.