“What is performance testing?”

Another day, another good question on Quora.

For years, I’ve been answering “what is performance testing?” in a variety of ways. For a technical audience, I talk about process, tools, scripts, measurements, and analysis. More often, though, I need to convey the concept to a non-technical, or at least not very technical, person. Finding a good analogy is the key.

When it comes to load, performance, stress, and endurance, the road traffic analogy has served me best.

—

Computers process data.

- See these cars? Data travel back and forth within computers and through computer networks in a similar albeit more complicated way.

- See these lanes and intersections? They represent rules and constraints of data traffic.

Software performs well if it can sustain data traffic without problems.

When software performs well, users experience seamless interaction, and their data are not at risk of getting lost or corrupted.

Performance testing engineers explore the behavior of the software product and its infrastructure. They also work actively with business and technical stakeholders to figure out what the acceptable performance parameters would be.

Volume, concurrency, bottlenecks...

Let’s look at the road traffic again and see what applies to software.

The number of cars passing through constitutes the load on the road. The total load over a given time interval is the volume.

A 100-meter segment of road can be occupied by 10 or more vehicles at once. But notice the difference in speed between when there are just a few of them and when they’re going bumper to bumper.

Software, especially its server-side components, concurrently processes requests from many users. This involves both data traffic and server resources: just as cars take up space on the road, each connected user requires memory and other resources to be allocated.

Notice that, at any given point on the road, only as many cars can pass side by side as there are lanes. And if the number of lanes varies, it is the sections with the fewest lanes that most affect the speed of the traffic.

As there are road traffic bottlenecks, there are data traffic bottlenecks.
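To make the bottleneck idea concrete, here is a minimal Python sketch. The lane counts and the per-lane capacity are made-up numbers, and `route_throughput` is a hypothetical helper written for this illustration. It only shows that the flow a chain of sections can sustain is set by its narrowest section.

```python
def route_throughput(lanes_per_section, cars_per_lane_per_minute):
    """Return (cars per minute the whole route sustains, index of the bottleneck).

    Each section passes at most lanes * per-lane capacity; the route as a
    whole is limited by its narrowest section.
    """
    capacities = [lanes * cars_per_lane_per_minute for lanes in lanes_per_section]
    bottleneck_index = capacities.index(min(capacities))
    return capacities[bottleneck_index], bottleneck_index


# Hypothetical road: four sections with 3, 3, 1 and 2 lanes,
# each lane passing about 30 cars per minute (an assumed figure).
throughput, where = route_throughput([3, 3, 1, 2], 30)
print(f"Route sustains {throughput} cars/min; bottleneck is section {where}")
```

Widening any section other than the single-lane one would not change the result, which is why finding the bottleneck comes before tuning anything else.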

So now you know some of the jargon of performance testing engineers. These and other parameters are to be identified, measured, and analyzed in order to map them to points where software users may experience problems or slowdowns.

Performance testing process: modeling, simulation, analysis

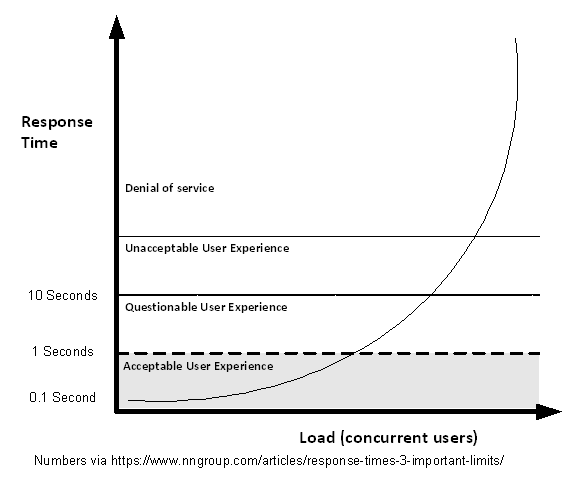

Let’s look at the graph above.

Response time is the most visible and obvious characteristic of software performance. However, when load is mishandled, software may also lose or corrupt user data.

Another counter-intuitive aspect is the distribution of user experience. It is possible that, during the same period of time, some users get a 1-second response while others experience a session timeout. Remember bottlenecks? Different user workflow scenarios may hit different bottlenecks.
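The uneven distribution is easy to miss when only an average is reported. The Python sketch below uses invented response times (18 fast users, 2 who hit an assumed 30-second timeout) and a hypothetical `summarize` helper to show how the mean, the median, and a high percentile tell different stories about the same run.

```python
import statistics


def summarize(response_times_s, timeout_s):
    """Summarize response times (seconds) from one load session."""
    ordered = sorted(response_times_s)
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    cuts = statistics.quantiles(ordered, n=100, method="inclusive")
    return {
        "mean": statistics.mean(ordered),
        "median": statistics.median(ordered),
        "p95": cuts[94],
        "max": ordered[-1],
        "timed_out": sum(1 for t in ordered if t >= timeout_s),
    }


# Made-up sample: most users get 1 second, two run into the timeout.
sample = [1.0] * 18 + [30.0] * 2
summary = summarize(sample, timeout_s=30.0)
for name, value in summary.items():
    print(f"{name}: {value}")
```

In this sample the median stays at 1 second while the mean is pulled well above it, and neither reveals on its own that two users timed out.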

Modeling

A big part of skilled performance testing is load modeling.

Many teams decide to put together a “test bed” of servers and network infrastructure, develop some scripts simulating user requests, run the whole thing against the application, and see if they can satisfy the business requirements. This approach works only some of the time, and explaining why it worked or failed is equally hard.

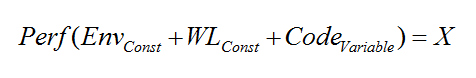

Let’s take a closer look at the parts constituting the “performance equation”:

Where

- “Env” stands for Test Environment (network, switches, load balancers, servers, database, and platform software);

- “WL” stands for Workload scripts simulating user activity;

- “Code” stands for the Application code, including both in-house components and third-party libraries;

- And “X” is the set of performance characteristics (response time, throughput, etc.).

The testing environment’s hardware and software have a multi-faceted impact on the application’s performance. While the risks of testing on significantly different hardware are readily seen, other differences might not be so obvious. Server settings, memory allocation, and process priorities are the first things to consider.

Workload models are often based on service-level agreements and business requirements. Some common examples include the number of transactions, the number of concurrent users, and response times. “Straightforward” models often miss aspects like simultaneous requests, as illustrated by the road traffic bottlenecks. While one may argue that it’s unlikely that a few hundred concurrent users will simultaneously press “Submit,” what are the chances that ten would occasionally coincide?
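That closing question can be roughed out with a little arithmetic. The Python sketch below rests entirely on assumptions that are not part of any real workload: 300 concurrent users, each independently pressing “Submit” about once a minute, with “simultaneously” meaning “within the same one-second window,” and with windows treated as independent. The helper name `prob_at_least` is hypothetical.

```python
from math import comb


def prob_at_least(k, p, n):
    """Probability that at least k of n independent users act in one window,
    when each acts with probability p (binomial model, an assumption)."""
    below_k = sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k))
    return 1 - below_k


USERS = 300          # assumed number of concurrent users
P_SUBMIT = 1 / 60    # assumed chance a given user submits in a given second
WINDOWS = 3600       # one-second windows in a one-hour session

p_window = prob_at_least(10, P_SUBMIT, USERS)
# Chance that at least one window in the hour sees 10 or more submits,
# treating the windows as independent (a simplification).
p_hour = 1 - (1 - p_window) ** WINDOWS

print(f"10+ simultaneous submits in a given second: {p_window:.4f}")
print(f"at least once during the hour: {p_hour:.4f}")
```

Under these made-up numbers a ten-way coincidence is unlikely in any single second but close to certain over an hour, which is the kind of event a straightforward model leaves out.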

Simulation

While a good performance testing tool gathers many parameters during the load session, testers also keep an eye on server stats. That is, if they want to spot problems like

- High memory usage and memory leaks

- High CPU usage

- Allocation and release of resources

- Disk space

...and many others.

The application server may have different problems from the database server.
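As one illustration of watching server stats, a memory leak tends to show up as steady growth across a long run rather than as a single high reading. The Python sketch below assumes memory readings (in MB) taken at equal intervals on a server; both series are invented, and `growth_per_sample` is a hypothetical helper that fits a straight line through the readings.

```python
def growth_per_sample(samples):
    """Least-squares slope of the readings against their sample index."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = (n - 1) / 2
    mean_y = sum(samples) / n
    num = sum((i - mean_x) * (y - mean_y) for i, y in enumerate(samples))
    den = sum((i - mean_x) ** 2 for i in range(n))
    return num / den


# Made-up readings in MB, one per sampling interval.
steady = [512, 530, 508, 525, 515, 520]    # fluctuates around a level
leaking = [512, 540, 571, 598, 633, 660]   # climbs with every sample

print(f"steady:  {growth_per_sample(steady):+.1f} MB per sample")
print(f"leaking: {growth_per_sample(leaking):+.1f} MB per sample")
```

A slope near zero suggests memory is being released as fast as it is allocated; a persistently positive slope is a reason to look closer, not proof of a leak by itself.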

Analysis

Results of a load session rarely answer the binary “pass/fail” question. Performance testing engineers carefully analyze the errors and their distribution, match them with the user scenarios, and prepare a compelling report. It’s a skill in itself.
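A first step in that kind of analysis is often to break the raw results down by user scenario instead of judging the run as a whole. The Python sketch below assumes each result is a `(scenario, seconds, ok)` tuple; the record layout, the scenario names, and the numbers are all invented for illustration.

```python
from collections import defaultdict


def summarize_by_scenario(results):
    """Group (scenario, seconds, ok) records and report per-scenario figures."""
    grouped = defaultdict(list)
    for scenario, seconds, ok in results:
        grouped[scenario].append((seconds, ok))

    report = {}
    for scenario, rows in grouped.items():
        errors = sum(1 for _, ok in rows if not ok)
        report[scenario] = {
            "requests": len(rows),
            "error_rate": errors / len(rows),
            "slowest_s": max(seconds for seconds, _ in rows),
        }
    return report


# Made-up results from one load session.
results = [
    ("search", 0.8, True),
    ("search", 1.1, True),
    ("checkout", 1.0, True),
    ("checkout", 30.0, False),
    ("checkout", 12.5, True),
    ("search", 0.9, True),
]
report = summarize_by_scenario(results)
for scenario, stats in report.items():
    print(scenario, stats)
```

Here the run as a whole has one error in six requests, but the breakdown shows that every slow or failed request belongs to a single scenario, which is where the report would point.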